Chatbots using Seq2Seq model with Keras in Python

By Viraj Nayak

In this project, we implement the Seq2Seq model and build chatbots by training it on three datasets.

Seq2Seq is a type of encoder-decoder model built from recurrent neural networks (RNNs). It consists of two components: an encoder and a decoder. Seq2Seq models are used for tasks such as human-machine conversation (chatbots), machine translation and speech recognition.
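To make the encoder/decoder split concrete, here is a minimal sketch (standard-library Python only) of how one question/answer pair could be turned into training sequences. The encoder reads the question. The decoder is given the answer prefixed with a start marker and is trained to predict the same answer followed by an end marker. The cleaning rules, the `clean` and `make_example` helpers and the `<start>`/`<end>` tokens are hypothetical; the source does not show the project's actual preprocessing.

```python
import re

# Hypothetical special tokens: the source does not name the markers it uses.
START, END = "<start>", "<end>"


def clean(text: str) -> str:
    """Lowercase, put spaces around basic punctuation, and drop other symbols."""
    text = text.lower()
    text = re.sub(r"([?.!,])", r" \1 ", text)
    # Any run of disallowed characters (including repeated spaces) becomes one space.
    text = re.sub(r"[^a-z0-9?.!,']+", " ", text)
    return text.strip()


def make_example(question: str, answer: str):
    """Turn one question/answer pair into the three token lists a Seq2Seq model trains on."""
    encoder_input = clean(question).split()
    answer_tokens = clean(answer).split()
    # The decoder input is the answer shifted right by one step (it starts with START)...
    decoder_input = [START] + answer_tokens
    # ...and the target is the answer shifted left (it ends with END).
    decoder_target = answer_tokens + [END]
    return encoder_input, decoder_input, decoder_target


enc, dec_in, dec_out = make_example("Hi, how are you?", "I'm fine. How about you?")
print(enc)      # ['hi', ',', 'how', 'are', 'you', '?']
print(dec_in)
print(dec_out)
```

At each position the decoder target is the token that follows the corresponding decoder input, which is what lets the decoder learn to produce the reply one token at a time.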

In our implementation, both the encoder and the decoder are LSTM networks (in general, GRUs are also a common choice). The encoder reads the input sequence and summarizes it into internal state vectors (for an LSTM, the hidden state and the cell state). The encoder's outputs are discarded. Only its final internal states are kept, because they are meant to encapsulate the information from all input elements and so help the decoder make accurate predictions. The decoder is an LSTM whose initial states are set to the final states of the encoder LSTM. Starting from these states, the decoder generates the output sequence one token at a time, feeding each generated token back in to produce the next one.
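The encoder-decoder wiring described above can be sketched with the Keras functional API. This is a minimal sketch, not the project's actual code: the vocabulary size, the embedding and state sizes, the separate encoder/decoder embeddings, the absence of padding masks and the greedy decoding helper `greedy_decode` are all assumptions made for illustration. The two key calls are `LSTM(..., return_state=True)`, which exposes the encoder's final hidden and cell states, and `initial_state=...`, which hands those states to the decoder.

```python
import numpy as np
from tensorflow.keras import Model, layers

# Hypothetical sizes, chosen only for illustration; the source does not give them.
VOCAB_SIZE = 1000   # number of distinct token ids
EMBED_DIM = 64
LATENT_DIM = 128    # size of the LSTM hidden and cell state vectors

# --- Encoder: read the question and keep only the final LSTM states ---
encoder_inputs = layers.Input(shape=(None,), dtype="int32", name="encoder_tokens")
enc_emb = layers.Embedding(VOCAB_SIZE, EMBED_DIM)(encoder_inputs)
# return_state=True also returns the final hidden state h and cell state c;
# the first return value (the encoder output) is discarded.
_, state_h, state_c = layers.LSTM(LATENT_DIM, return_state=True)(enc_emb)
encoder_states = [state_h, state_c]

# --- Decoder: an LSTM whose initial states are the encoder's final states ---
decoder_inputs = layers.Input(shape=(None,), dtype="int32", name="decoder_tokens")
dec_embedding = layers.Embedding(VOCAB_SIZE, EMBED_DIM)
dec_lstm = layers.LSTM(LATENT_DIM, return_sequences=True, return_state=True)
dec_dense = layers.Dense(VOCAB_SIZE, activation="softmax")

dec_seq, _, _ = dec_lstm(dec_embedding(decoder_inputs), initial_state=encoder_states)
decoder_outputs = dec_dense(dec_seq)

# Training model: (question ids, shifted answer ids) -> next-token probabilities.
model = Model([encoder_inputs, decoder_inputs], decoder_outputs)
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

# --- Inference: reuse the same layers to generate one token at a time ---
encoder_model = Model(encoder_inputs, encoder_states)

state_in_h = layers.Input(shape=(LATENT_DIM,))
state_in_c = layers.Input(shape=(LATENT_DIM,))
single_token = layers.Input(shape=(1,), dtype="int32")
step_out, h_out, c_out = dec_lstm(
    dec_embedding(single_token), initial_state=[state_in_h, state_in_c]
)
decoder_model = Model(
    [single_token, state_in_h, state_in_c], [dec_dense(step_out), h_out, c_out]
)


def greedy_decode(question_ids, start_id, end_id, max_len=20):
    """Hypothetical helper: generate reply token ids by feeding each prediction back in."""
    states = encoder_model.predict(np.array([question_ids], dtype="int32"), verbose=0)
    token = np.array([[start_id]], dtype="int32")
    reply = []
    for _ in range(max_len):
        probs, h, c = decoder_model.predict([token] + list(states), verbose=0)
        next_id = int(np.argmax(probs[0, -1]))
        if next_id == end_id:
            break
        reply.append(next_id)
        token = np.array([[next_id]], dtype="int32")  # the output becomes the next input
        states = [h, c]  # carry the decoder state forward
    return reply
```

During training the model would be fitted with the question ids, the answer ids prefixed with a start token, and the answer ids followed by an end token as targets (teacher forcing). At inference time `greedy_decode` instead feeds each predicted token back into the decoder, which is the feedback loop the paragraph describes. Greedy argmax is the simplest decoding choice; beam search and sampling are common alternatives.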

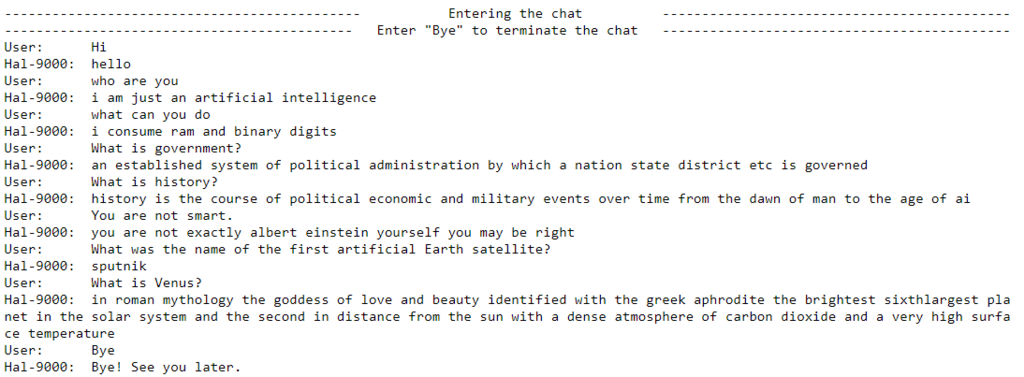

Below are examples of conversations with chatbots created in this project:

1. Chatbot trained on dialogs.txt:

2. Chatbot trained on chatterbot_corpus dataset:

3. Chatbot trained on Movie_dialog_corpus:

The libraries used across the project are:

- numpy

- pandas

- yaml

- matplotlib

- seaborn

- re

- tensorflow

- keras

Submitted by Viraj Nayak (nayakviraj21)

Download packets of source code on Coders Packet
